Welcome to Nicolas Vizerie's website! I am a C++ developer interested in games and computer graphics.

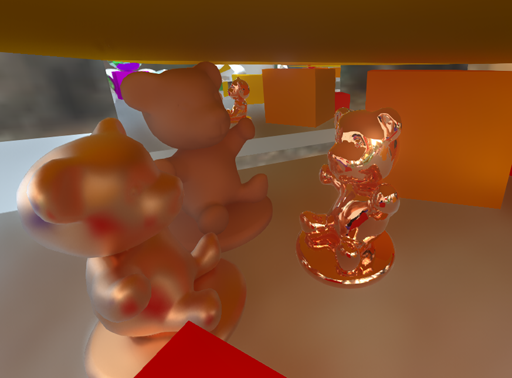

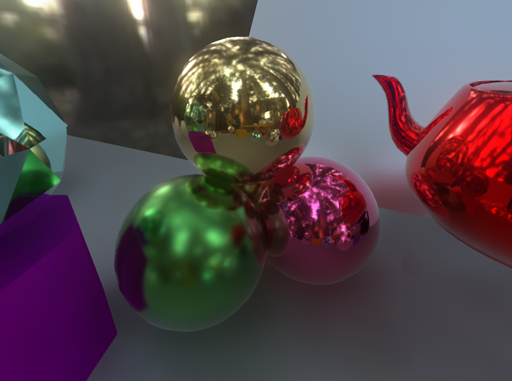

I have not posted a demo in a long time, and found an occasion to try some new things. This is a simple path tracing demo. It should work on any DX11 GPU, but it requires a fast one, such as an nVidia 1080, and it isn't optimized at all yet. Most of the code was written in late 2013, when I wrote a KD-tree builder in a compute shader but never had the time to complete it. Once a raytracing primitive is available in a shader, it becomes easier to do simple raytracing experiments, even without a graphics card with raytracing support. In the demo there are 3 ray bounces + 1 primary ray (the result is biased for now), and a simple denoising shader that can certainly be improved. Of course, because this demo does not use raytracing acceleration through DXR (DX12 would be required for that), it is not representative of the speed that could be achieved with it. For now, most of the GPU cycles go into the 'software' raytracing, implemented as a compute shader.

The whole demo uses the GGX BRDF with a simple Fresnel term.
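As a rough illustration of the two terms mentioned above, here is a minimal C++ sketch of the GGX normal distribution and of Schlick's Fresnel approximation. The function names are hypothetical, and using Schlick for the "simple Fresnel" is an assumption; the demo's shaders may do something different.

```cpp
#include <algorithm>
#include <cmath>

// GGX (Trowbridge-Reitz) normal distribution term.
// nDotH: cosine of the angle between the surface normal and the half vector.
// alpha: GGX roughness parameter (how it maps to an artist-facing roughness
//        value is left open here).
inline float ggxDistribution(float nDotH, float alpha)
{
    const float kPi = 3.14159265358979f;
    const float a2 = alpha * alpha;
    const float d = nDotH * nDotH * (a2 - 1.0f) + 1.0f;
    return a2 / (kPi * d * d);
}

// Schlick's approximation of Fresnel reflectance (assumed, see lead-in).
// f0: reflectance at normal incidence.
// vDotH: cosine of the angle between the view vector and the half vector.
inline float fresnelSchlick(float f0, float vDotH)
{
    const float c = 1.0f - std::min(1.0f, std::max(0.0f, vDotH));
    return f0 + (1.0f - f0) * c * c * c * c * c;
}
```

With `alpha = 1` the distribution becomes uniform over the hemisphere (value 1/pi for every half vector), which is a handy sanity check.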

I'm aware that some artifacts remain (some motion vectors can be wrong, resulting in spots / acne) and hope to find the occasion to fix them :)

Demo downloadable here

Youtube video #2 here

Youtube video #1 here (old video)

Please note that this demo is independent from my workplace, and I want to thank them for allowing this publication :)

Version history

There is now a new section available, where miscellaneous tools and utilities will be added. Please check it out here.

MLAA (MorphoLogical AntiAliasing) is a recent technique developed by Intel that applies antialiasing to an image by using a shape recognition strategy, after edge detection has been performed on the image. It can compete with MSAA quality-wise.

The original paper is here : http://visual-computing.intel-research.net/publications/papers/2009/mlaa/mlaa.pdf

The original technique is not very well suited to a GPU using pixel shaders alone, so some adaptation was needed. The reason is that the algorithm scans edges and patches pixels based on the edge length and on the configuration at the edge extremities (to sum up). Edge extremities can be far from the current pixel, so using a pixel shader (pure parallel model) requires each pixel to recompute the distance from itself to the edge extremities. For an edge of length N, the complexity becomes O(N²), which can lead to performance problems.

The obvious solution is to compute a bilateral distance texture.

The algorithm in this work unfolds as follows:

- Detect the edges of the image based on color difference (Z and normal deltas could also be used if 3D data is available; this can make a huge difference in quality). Store in R a boolean to indicate horizontal edges, and in G another boolean to indicate vertical edges. An RGB565 texture is well suited for this. During this operation, set the stencil to 1 where an edge was found (using a pixel discard).

- Scan edges in the cardinal directions until the edge ends or an orthogonal edge is found. Do this for up to 4 pixels (only for pixels with stencil = 1, for speedup). Store the distance in an RGBA8 texture (one component per cardinal direction).

- For each direction, propagate the distance. Let D(x) be the current distance for pixel x (which can be 4 at most for now). Update the distance 4 times by doing D'(x) <- D(x) + D(x + D(x)). The max distance is now 16. If the initial distance was < 3, propagation was already complete and nothing had to be done.

- Repeat the previous step: the max distance is now 64, unless the initial distance is less than 16 (no-op in this case).

- Repeat the previous step: the max distance is now 255 (the max distance that can be stored in a byte), unless the initial distance is less than 64 (no-op in this case).

- Perform the final blend (see mlaa.ps in the .zip for details).
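The distance propagation steps above can be sketched on the CPU for a single row and a single direction. This is only an illustration under stated assumptions, not the shader from the .zip: the function names are hypothetical, the distance is taken to be the number of consecutive edge pixels starting at the pixel itself, and each pass is assumed to read the previous texture once and then follow it three more times (which is what turns a maximum of 4 into 16, then 64, then 255).

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Initial scan: for each pixel, count consecutive edge pixels to the right,
// starting at the pixel itself, up to maxSteps.
std::vector<std::uint8_t> scanDistances(const std::vector<bool>& edge, int maxSteps)
{
    const std::size_t n = edge.size();
    std::vector<std::uint8_t> dist(n, 0);
    for (std::size_t x = 0; x < n; ++x) {
        int d = 0;
        while (d < maxSteps && x + static_cast<std::size_t>(d) < n &&
               edge[x + static_cast<std::size_t>(d)]) {
            ++d;
        }
        dist[x] = static_cast<std::uint8_t>(d);
    }
    return dist;
}

// One propagation pass: D'(x) = D(x) + D(x + D(x)), applied 'hops' times
// while always reading from the previous distance texture.
// A pixel whose run already ended lands on a non-edge pixel (distance 0),
// so the update is a no-op for it.
std::vector<std::uint8_t> propagate(const std::vector<std::uint8_t>& prev, int hops)
{
    const std::size_t n = prev.size();
    std::vector<std::uint8_t> next(n, 0);
    for (std::size_t x = 0; x < n; ++x) {
        int d = prev[x];
        for (int i = 0; i < hops; ++i) {
            const std::size_t target = x + static_cast<std::size_t>(d);
            if (target >= n) break;          // ran off the image
            d += prev[target];
        }
        next[x] = static_cast<std::uint8_t>(std::min(d, 255)); // fits in a byte
    }
    return next;
}

// Full chain: scan up to 4 pixels, then three passes (max 16, 64, 255).
std::vector<std::uint8_t> edgeRunLengths(const std::vector<bool>& edge)
{
    std::vector<std::uint8_t> d = scanDistances(edge, 4);
    for (int pass = 0; pass < 3; ++pass) {
        d = propagate(d, 3);
    }
    return d;
}
```

The point of the scheme is that every pixel does a small, fixed number of fetches per pass, instead of walking the whole edge on its own.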

As hinted by www.iryokufx.com/mlaa , bilinear filtering can be used to speed things up. I used it during edge scanning (to test 2 edges in a single texture fetch) and in the final blend.

I borrowed the idea of encoding the distance in an RGBA8 texture from there (I was initially going to use a float16 texture) : http://igm.univ-mlv.fr/~biri/mlaa-gpu/MLAAGPU.pdf, though the idea of using a bilateral texture does not come from this paper. I also tried to use a lookup table to encode blend weights as they did, but in my case it was slower (I guess this is because the ALU/TEX ratio on my GPU must be higher).

On an nVidia 8700M GT, an 800x600 image takes 6.3 ms to process. I guess a desktop GPU would do much better.

Executable + shaders can be found here

Lately I tried to reproduce the lightmap technique that can be seen in the UDK. This technique encodes the distance to occluder boundaries in a Luminance8 texture, instead of storing the shadow term directly. A simple MAD operation is then used in the pixel shader to retrieve the shadow value from the interpolated distance (so it is essentially free compared to standard shadow masks). In my attempt I used ray tracing to compute the distance to the occluders as seen from the receiver. As can be seen in the following screenshots, the accuracy is much better than with the usual shadow masks. This is very similar to what Valve did in Half-Life 2 with their vector textures : Improved Alpha-Tested Magnification for Vector Textures and Special Effects

With everyone doing SSAO these days, I decided to give it a try too. I developed an extension of the algorithm that shows how to add high-frequency details to the ambient occlusion term. The technique uses 3 color components to store the occlusion over each third of the hemisphere for each sampling position (whereas standard SSAO samples over a 'whole' hemisphere for each pixel in screen space). Each component is then blurred using a 'bilateral filter' as usual. In this work I did a separate edge filter pass before the blur. In the end, the normal map is read, and occlusion is computed by weighting the normal with respect to each sampling direction. This is very similar to the Source shading, but applied in screen space. The executable also has additional features such as diffuse bleeding. The technique can somewhat enhance environments where baking the lighting is not possible (dynamic and/or huge worlds ...). The aim of the sample is to demonstrate the visual enhancement that normal maps bring, but speed-wise it can certainly be improved :).

This sample requires a recent DirectX 9.0c runtime and a Shader Model 3.0 capable GPU. It was tested on an nVidia 8700M GT and an 8800.

The "Diffuse Bleeding" option was inspired by the following article : Approximating Dynamic Global Illumination in Image Space.

Please see the README.TXT file in the archive for more information about the implementation.

Lucy model courtesy of Stanford University

Thanks to Lionel Vizerie for additional testing!

A small demo that demonstrates "volumetric lighting". A shadow map as well as a "gobo" texture are sampled along each view ray in the pixel shader to produce the final color for each pixel. Two techniques are demonstrated. In the first one, dynamic branching is used to know when to stop the sampling. The second technique doesn't rely on dynamic branching, but uses occlusion queries and multipass rendering with a stencil test to know when to stop the rendering. When hardware shadow maps are activated, percentage-closer filtering is used, which leads to better quality.

You need a (fast...) GPU supporting Pixel Shader model 3.0 to run the demo (I tested it on an nVidia 6800 and an 8700M GT; I don't know if it works with ATI GPUs ...). The pixel shader is very costly, so it may be necessary to reduce the window size to get something smooth on older GPUs ...

This sample demonstrates the "normal mapped radiosity" technique (also known as "directional lightmaps") presented by Valve Software for the Source engine. It is based on the following paper : "Half Life 2 / Source shading". I found it interesting, so I decided to try to code it. Here's my attempt at implementing what is presented in the article. The sample introduces a slight variation : the lightmaps contain only "indirect lighting", instead of full lighting. This makes it possible to retain shadows and specular for each light, instead of using probes and cubemaps. To achieve this I used the baking system I developed earlier for lightmasks (per-light static shadow masks), and extended it to include this new, better ambient lighting. The result is, of course, slower, because it uses multipass lighting instead of a single-pass shader (with modulated shadows). However, because of the new ambient term, "fill lights" are less necessary, so it is possible to have each surface hit by one light at most and still have good overall lighting, and thus single-pass rendering everywhere if necessary (there are some overlapping lights in the demo, so it is not a single-pass render). The program shows how using "normal mapped indirect lighting" as the ambient component in a scene can enhance the realism of the rendering. To demonstrate this, sliders control the amount of ambient lighting on static geometry and dynamic geometry. For dynamic geometry I implemented radiance sampling on a regular grid, as described in the paper. Dynamic objects look up the "light flow" at their center in this grid.
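For readers unfamiliar with the technique, here is a minimal C++ sketch of how three directional lightmap samples can be blended with a tangent-space normal. The basis vectors are the ones given in the Source shading paper; the squared, normalized weights are one common variant and an assumption here, and the function names are hypothetical. The demo's shader may weight things differently.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

struct Vec3 { float x, y, z; };

inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Tangent-space basis used for directional lightmaps in the Source engine.
inline std::array<Vec3, 3> hl2Basis()
{
    const float s23 = std::sqrt(2.0f / 3.0f);
    const float s16 = 1.0f / std::sqrt(6.0f);
    const float s12 = 1.0f / std::sqrt(2.0f);
    const float s13 = 1.0f / std::sqrt(3.0f);
    return {{ Vec3{ s23, 0.0f, s13 }, Vec3{ -s16, s12, s13 }, Vec3{ -s16, -s12, s13 } }};
}

// Blends the three lightmap colors (one per basis direction) according to
// how much the tangent-space normal faces each direction.
inline Vec3 indirectLighting(Vec3 normal, const std::array<Vec3, 3>& lightmaps)
{
    const std::array<Vec3, 3> basis = hl2Basis();
    Vec3 sum{0.0f, 0.0f, 0.0f};
    float weightSum = 0.0f;
    for (std::size_t i = 0; i < 3; ++i) {
        float w = std::max(0.0f, dot(normal, basis[i]));
        w *= w; // squared weights (assumed variant)
        sum.x += lightmaps[i].x * w;
        sum.y += lightmaps[i].y * w;
        sum.z += lightmaps[i].z * w;
        weightSum += w;
    }
    if (weightSum > 0.0f) {
        sum.x /= weightSum;
        sum.y /= weightSum;
        sum.z /= weightSum;
    }
    return sum;
}
```

An unperturbed normal (0, 0, 1) faces the three basis directions equally, so it receives the average of the three lightmaps; a normal aligned with one basis direction receives only that direction's lightmap.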

You need a GPU supporting Pixel Shader 2.0 to run the demo (ATI Radeon 9500 or more, nVidia Geforce FX or more)

Click here to download the demo

This is a project that was done during 2004 after having read the paper "soft shadow volumes using penumbra wedges", and its optimized version "An optimized Soft Shadow Volume algorithm with realtime performance". Having started this site only recently, it was the occasion to release it. It uses Direct3D as the rendering API.

This sample only implements the case of a spherical light source. While the original papers used 2 visibility buffers (one additive and one subtractive), this sample improves on the original algorithm by using a single v-buffer, with subtractive blending to account for negative values. As a result, some VRAM is saved, and some bandwidth is saved in the final compositing shader as well. Moreover, the texture containing object coordinates is computed in camera space, not in world space. This results in increased accuracy for FP16 buffers, as coordinates are more likely to be in the same small, delimited range.

You need a GPU supporting Pixel Shader 2.0 to run this program (ATI Radeon 9500 or more, nVidia Geforce FX or more)

Click here to download a demo of the thing!

Source code of the demo is also available.

External links :

Deferred Shaded Penumbra Wedges : A recent, innovative DX10 soft shadow algorithm by Victor Coda. Very interesting for those wishing to go further!

Here's the PC demo named 'coaxial' that we presented at the main party #2, where it ranked #2! This demo was mostly a learning process, and aims to show nice sci-fi scenes.

System requirements :

Version history :

- Added a new GUI at start

- Added multisampling option

- Added 'no sound' option

- The initial engine was using Lua for material animation scripting, but this was causing annoying freezes from time to time (due to the Lua garbage collector). In this version all animated parts of materials have been rewritten in C++, so the framerate is steadier.

- Initial party version

Downloads :

You can download the demo here

(version 1.1)

If you'd like to interactively fly through some scenes of the demo, some of them are included here!

A 640x480 video in AVI format is available here

Credits :

Code & GFX : Nicolas Vizerie

Music : Lionel Vizerie

External links :